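In search of a cluster that is easier to operate, this time I install kubespray.

(I started with a mix of CentOS and Ubuntu, but ended up standardizing on Ubuntu.)

The cluster consists of three PCs with the following specs.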

| os | node | cpu-core | gpu | ram (GB) | role |
|---|---|---|---|---|---|
| Ubuntu 22.04 | master | 8 | 3080 | 64 | playbook |
| Ubuntu 22.10 | master | 8 | 3070 | 16 | playbook |
| Ubuntu 22.10 | worker | 4 | - | 12 | playbook |
| macOS Ventura 13.0.1 | Ansible runner | | | | |

hardware lookup

sudo apt install hwinfo -y

disable memory swap - ALL nodes

sudo swapoff -a
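`swapoff -a` only lasts until the next reboot. A minimal sketch of making it persistent, assuming swap is declared in `/etc/fstab` (the helper name `comment_swap_entries` is made up for this example): it prints the file with active swap entries commented out, so the result can be reviewed before it replaces the original.

```shell
# Print an fstab-style file with active swap entries commented out.
# Nothing is modified in place; the caller decides what to do with the output.
comment_swap_entries() {
  # Default path is an assumption; pass another file to test safely.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "${1:-/etc/fstab}"
}

# Possible usage (review the output first):
#   comment_swap_entries /etc/fstab | sudo tee /etc/fstab.new > /dev/null
#   sudo mv /etc/fstab.new /etc/fstab
```

Lines that are already commented are left untouched, so the function can be run repeatedly.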

enable ip forward - ALL nodes

sudo sh -c 'echo 1 > /proc/sys/net/ipv4/ip_forward'

cat /proc/sys/net/ipv4/ip_forward

add master/worker in hosts - ALL nodes

sudo vim /etc/hosts

192.168.32.21 master1

192.168.32.180 master2

192.168.32.205 worker1
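Editing the hosts file by hand on every node is easy to get wrong. As a rough sketch (the function name `add_host_entry` is hypothetical), the entries above could be appended only when the hostname is not already present, which makes the step safe to repeat:

```shell
# Append "ip name" to a hosts file unless that hostname is already listed.
add_host_entry() {
  hosts_file="$1"; ip="$2"; name="$3"
  # The name must be preceded by whitespace and followed by whitespace or end of line.
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Possible usage as root on each node:
#   add_host_entry /etc/hosts 192.168.32.21 master1
#   add_host_entry /etc/hosts 192.168.32.180 master2
#   add_host_entry /etc/hosts 192.168.32.205 worker1
```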

install python, pip - ALL nodes

sudo apt install python3.10 python3-pip -y

sudo update-alternatives --install /usr/bin/python python /usr/bin/python3.10 1

python --version

install docker on ubuntu 22.10

sudo apt-get install ca-certificates curl gnupg lsb-release -y

# add docker's official GPG key

sudo mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

echo \
"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
$(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# refresh the package index

sudo apt-get update

# if the update reports a GPG error, make the key readable and retry

sudo chmod a+r /etc/apt/keyrings/docker.gpg

sudo apt-get update

# install docker engine, containerd

sudo apt-get install docker-ce docker-ce-cli containerd.io -y

# check

docker ps

bootstrap: run ansible (on a Mac, in my case)

From here on, Ansible connects over SSH to each playbook target, and kubespray installs Kubernetes on every node.

copy SSH key

ssh-keygen -t rsa -b 2048

cat ~/.ssh/id_rsa.pub

# -i [ssh-key file] [host server]

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.186.33.20

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.186.33.21

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.186.33.22
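When the nodes use a non-default SSH port or a specific login user, the key has to be copied with matching options. The sketch below only prints the commands (a dry run), so they can be checked before anything is executed. The function name `print_copy_id_cmds` is invented for this example, and the user and port are whatever your own inventory uses.

```shell
# Print one ssh-copy-id command per node instead of running it.
# Arguments: login user, SSH port, then any number of host addresses.
print_copy_id_cmds() {
  user="$1"; port="$2"; shift 2
  for host in "$@"; do
    printf 'ssh-copy-id -i ~/.ssh/id_rsa.pub -p %s %s@%s\n' "$port" "$user" "$host"
  done
}

# Possible usage: inspect the output, then pipe it to sh to actually run it.
#   print_copy_id_cmds ps 5678 192.168.31.21 192.168.31.180 192.168.31.205
#   print_copy_id_cmds ps 5678 192.168.31.21 192.168.31.180 192.168.31.205 | sh
```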

install ansible

brew install ansible

clone kubespray

git clone https://github.com/kubernetes-sigs/kubespray.git

cd kubespray

git checkout release-2.20

# pip install

# pip install ansible==2.10.7 # run only if you hit a version error

pip3 install -r requirements.txt

# copy my inventory config

cp -rfp inventory/sample inventory/cluster

modify inventory.ini

# vim inventory/cluster/inventory.ini

# ## Configure 'ip' variable to bind kubernetes services on a

# ## different ip than the default iface

# ## We should set etcd_member_name for etcd cluster. The node that is not a etcd member do not need to set the value, or can set the empty string value.

[all]

master1 ansible_host=192.168.31.21 ip=192.168.31.21 etcd_member_name=etcd1 ansible_port=5678 ansible_user=ps

master2 ansible_host=192.168.31.180 ip=192.168.31.180 etcd_member_name=etcd2 ansible_port=5678 ansible_user=ps

node1 ansible_host=192.168.31.205 ip=192.168.31.205 etcd_member_name=etcd3 ansible_port=5678 ansible_user=ps

# node4 ansible_host=95.54.0.15 # ip=10.3.0.4 etcd_member_name=etcd4

# node5 ansible_host=95.54.0.16 # ip=10.3.0.5 etcd_member_name=etcd5

# node6 ansible_host=95.54.0.17 # ip=10.3.0.6 etcd_member_name=etcd6

# ## configure a bastion host if your nodes are not directly reachable

# [bastion]

# bastion ansible_host=x.x.x.x ansible_user=some_user

[kube_control_plane]

master1

master2

# node3

[etcd]

master1

master2

node1

[kube_node]

node1

# node3

# node4

# node5

# node6

[calico_rr]

[k8s_cluster:children]

kube_control_plane

kube_node

calico_rr
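A mistyped host variable, such as `name-value` instead of `name=value`, is easy to miss in a long inventory line. Here is a small check, offered as a sketch rather than a full INI parser (the function name `find_bad_host_vars` is hypothetical). It lists every token in the `[all]` section that is not a `key=value` pair.

```shell
# Report tokens in the [all] section of an inventory that are not key=value.
# Output format: "<host>: <offending token>", one per line.
find_bad_host_vars() {
  awk '
    /^[ \t]*#/ { next }                               # skip comments
    NF == 0    { next }                               # skip blank lines
    /^\[/      { in_all = ($0 == "[all]"); next }     # track the current section
    in_all {
      for (i = 2; i <= NF; i++)
        if ($i !~ /=/) print $1 ": " $i
    }
  ' "$1"
}

# Possible usage:
#   find_bad_host_vars inventory/cluster/inventory.ini
```

An empty result means every host variable in `[all]` at least has the `key=value` shape; it does not check that the keys or values are meaningful.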

# replace all in vim (run these inside vim)

:%s/host/ps.k8smaster80.org

:%s/host/ps.k8smaster70.org

:%s/host/ps.k8sworker.org

I had already blocked password access to these servers, so I added the ansible_port setting.

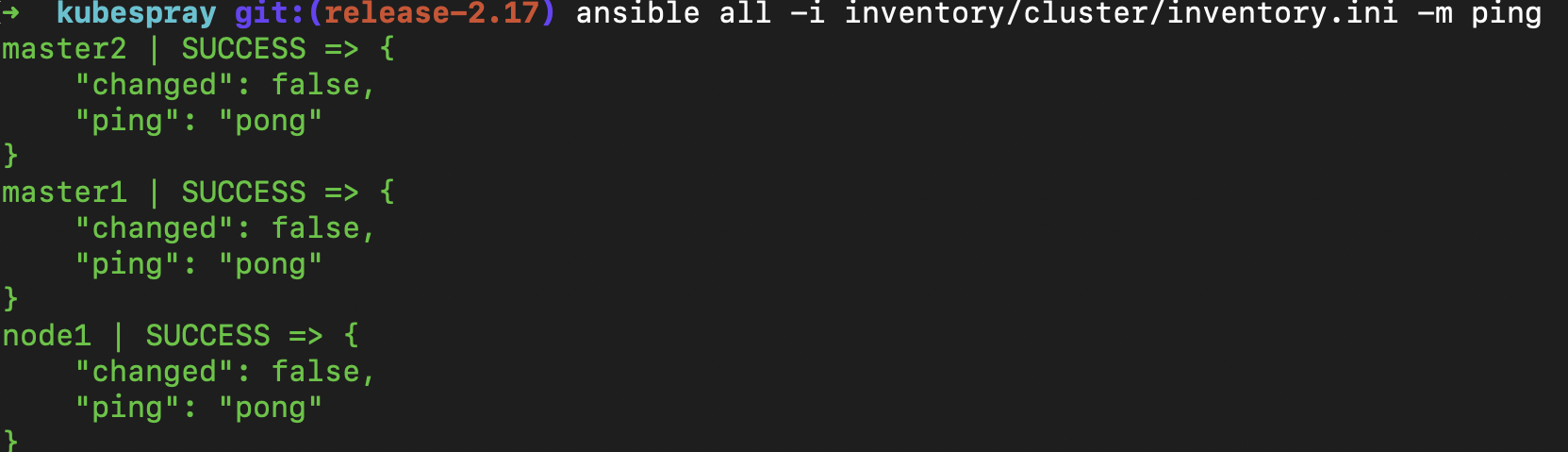

check connectivity

ansible all -i inventory/cluster/inventory.ini -m ping

enable addons

# sudo vim ./inventory/cluster/group_vars/k8s_cluster/addons.yml

dashboard_enabled: true

metrics_server_enabled: true

ingress_nginx_enabled: true
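The same three switches can be flipped without opening an editor. This is a minimal sketch under the assumption that each key sits on its own line in `addons.yml`, either set to `false` or commented out; `enable_addon` is a made-up helper name.

```shell
# Set "<key>: true" in an addons.yml, whether the key is currently
# commented out ("# key: false") or present with another value.
enable_addon() {
  file="$1"; key="$2"
  # Write to a temporary file first so the edit works with both GNU and BSD sed.
  sed -E "s/^#?[[:space:]]*${key}:.*/${key}: true/" "$file" > "$file.tmp" &&
    mv "$file.tmp" "$file"
}

# Possible usage from the kubespray checkout:
#   f=inventory/cluster/group_vars/k8s_cluster/addons.yml
#   enable_addon "$f" dashboard_enabled
#   enable_addon "$f" metrics_server_enabled
#   enable_addon "$f" ingress_nginx_enabled
```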

# sudo vim ./inventory/cluster/group_vars/k8s_cluster/addons.yml

---

# Kubernetes dashboard

# RBAC required. see docs/getting-started.md for access details.

# dashboard_enabled: false

# Helm deployment

helm_enabled: false

# Registry deployment

registry_enabled: false

# registry_namespace: kube-system

# registry_storage_class: ""

# registry_disk_size: "10Gi"

# Metrics Server deployment

metrics_server_enabled: true

# metrics_server_resizer: false

# metrics_server_kubelet_insecure_tls: true

# metrics_server_metric_resolution: 15s

# metrics_server_kubelet_preferred_address_types: "InternalIP"

# Rancher Local Path Provisioner

local_path_provisioner_enabled: false

# local_path_provisioner_namespace: "local-path-storage"

# local_path_provisioner_storage_class: "local-path"

# local_path_provisioner_reclaim_policy: Delete

# local_path_provisioner_claim_root: /opt/local-path-provisioner/

# local_path_provisioner_debug: false

# local_path_provisioner_image_repo: "rancher/local-path-provisioner"

# local_path_provisioner_image_tag: "v0.0.19"

# local_path_provisioner_helper_image_repo: "busybox"

# local_path_provisioner_helper_image_tag: "latest"

# Local volume provisioner deployment

local_volume_provisioner_enabled: false

# local_volume_provisioner_namespace: kube-system

# local_volume_provisioner_nodelabels:

# - kubernetes.io/hostname

# - topology.kubernetes.io/region

# - topology.kubernetes.io/zone

# local_volume_provisioner_storage_classes:

# local-storage:

# host_dir: /mnt/disks

# mount_dir: /mnt/disks

# volume_mode: Filesystem

# fs_type: ext4

# fast-disks:

# host_dir: /mnt/fast-disks

# mount_dir: /mnt/fast-disks

# block_cleaner_command:

# - "/scripts/shred.sh"

# - "2"

# volume_mode: Filesystem

# fs_type: ext4

# CSI Volume Snapshot Controller deployment, set this to true if your CSI is able to manage snapshots

# currently, setting cinder_csi_enabled=true would automatically enable the snapshot controller

# Longhorn is an extenal CSI that would also require setting this to true but it is not included in kubespray

# csi_snapshot_controller_enabled: false

# CephFS provisioner deployment

cephfs_provisioner_enabled: false

# cephfs_provisioner_namespace: "cephfs-provisioner"

# cephfs_provisioner_cluster: ceph

# cephfs_provisioner_monitors: "172.24.0.1:6789,172.24.0.2:6789,172.24.0.3:6789"

# cephfs_provisioner_admin_id: admin

# cephfs_provisioner_secret: secret

# cephfs_provisioner_storage_class: cephfs

# cephfs_provisioner_reclaim_policy: Delete

# cephfs_provisioner_claim_root: /volumes

# cephfs_provisioner_deterministic_names: true

# RBD provisioner deployment

rbd_provisioner_enabled: false

# rbd_provisioner_namespace: rbd-provisioner

# rbd_provisioner_replicas: 2

# rbd_provisioner_monitors: "172.24.0.1:6789,172.24.0.2:6789,172.24.0.3:6789"

# rbd_provisioner_pool: kube

# rbd_provisioner_admin_id: admin

# rbd_provisioner_secret_name: ceph-secret-admin

# rbd_provisioner_secret: ceph-key-admin

# rbd_provisioner_user_id: kube

# rbd_provisioner_user_secret_name: ceph-secret-user

# rbd_provisioner_user_secret: ceph-key-user

# rbd_provisioner_user_secret_namespace: rbd-provisioner

# rbd_provisioner_fs_type: ext4

# rbd_provisioner_image_format: "2"

# rbd_provisioner_image_features: layering

# rbd_provisioner_storage_class: rbd

# rbd_provisioner_reclaim_policy: Delete

# Nginx ingress controller deployment

ingress_nginx_enabled: true

# ingress_nginx_host_network: false

ingress_publish_status_address: ""

# ingress_nginx_nodeselector:

# kubernetes.io/os: "linux"

# ingress_nginx_tolerations:

# - key: "node-role.kubernetes.io/master"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# - key: "node-role.kubernetes.io/control-plane"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# ingress_nginx_namespace: "ingress-nginx"

# ingress_nginx_insecure_port: 80

# ingress_nginx_secure_port: 443

# ingress_nginx_configmap:

# map-hash-bucket-size: "128"

# ssl-protocols: "TLSv1.2 TLSv1.3"

# ingress_nginx_configmap_tcp_services:

# 9000: "default/example-go:8080"

# ingress_nginx_configmap_udp_services:

# 53: "kube-system/coredns:53"

# ingress_nginx_extra_args:

# - --default-ssl-certificate=default/foo-tls

# ingress_nginx_class: nginx

# ambassador ingress controller deployment

ingress_ambassador_enabled: false

# ingress_ambassador_namespace: "ambassador"

# ingress_ambassador_version: "*"

# ingress_ambassador_multi_namespaces: false

# ALB ingress controller deployment

ingress_alb_enabled: false

# alb_ingress_aws_region: "us-east-1"

# alb_ingress_restrict_scheme: "false"

# Enables logging on all outbound requests sent to the AWS API.

# If logging is desired, set to true.

# alb_ingress_aws_debug: "false"

# Cert manager deployment

cert_manager_enabled: false

# cert_manager_namespace: "cert-manager"

# MetalLB deployment

metallb_enabled: false

metallb_speaker_enabled: true

# metallb_ip_range:

# - "10.5.0.50-10.5.0.99"

# metallb_speaker_nodeselector:

# kubernetes.io/os: "linux"

# metallb_controller_nodeselector:

# kubernetes.io/os: "linux"

# metallb_speaker_tolerations:

# - key: "node-role.kubernetes.io/master"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# - key: "node-role.kubernetes.io/control-plane"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# metallb_controller_tolerations:

# - key: "node-role.kubernetes.io/master"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# - key: "node-role.kubernetes.io/control-plane"

# operator: "Equal"

# value: ""

# effect: "NoSchedule"

# metallb_version: v0.10.2

# metallb_protocol: "layer2"

# metallb_port: "7472"

# metallb_memberlist_port: "7946"

# metallb_additional_address_pools:

# kube_service_pool:

# ip_range:

# - "10.5.1.50-10.5.1.99"

# protocol: "layer2"

# auto_assign: false

# metallb_protocol: "bgp"

# metallb_peers:

# - peer_address: 192.0.2.1

# peer_asn: 64512

# my_asn: 4200000000

# - peer_address: 192.0.2.2

# peer_asn: 64513

# my_asn: 4200000000

# The plugin manager for kubectl

krew_enabled: false

krew_root_dir: "/usr/local/krew"

change settings

cd ~/kubespray

containerd_ubuntu_repo_component: "stable" -> latest

vim roles/container-engine/containerd/defaults/main.yml

docker_version: 'latest' # 20.04 -> latest

vim roles/container-engine/docker/defaults/main.yml

stable -> latest

vim roles/container-engine/docker/vars/ubuntu.yml

docker_repo_info:

repos:

->

deb [arch={{ host_architecture }}] {{ docker_ubuntu_repo_base_url }}

{{ ansible_distribution_release|lower }}

latest

nf_conntrack_ipv4 -> nf_conntrack

vim roles/kubernetes/node/tasks/main.yml

:%s/nf_conntrack_ipv4/nf_conntrack
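The rename is needed because `nf_conntrack_ipv4` was merged into `nf_conntrack` in Linux 4.19, so the old module name no longer exists on the kernels that Ubuntu 22.04 and 22.10 ship. A small sketch of picking the module name from a kernel release string (the function name `conntrack_module_name` is illustrative only):

```shell
# Print the conntrack module name that matches a kernel release string.
# With no argument, the running kernel (uname -r) is used.
conntrack_module_name() {
  release="${1:-$(uname -r)}"
  major="${release%%.*}"        # text before the first dot
  rest="${release#*.}"          # text after the first dot
  minor="${rest%%[!0-9]*}"      # leading digits of the remainder
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Possible usage on a node:
#   sudo modprobe "$(conntrack_module_name)"
```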

remove aufs-tools

vim roles/kubernetes/preinstall/vars/ubuntu.yml

---

required_pkgs:

- python3-apt

- apt-transport-https

- software-properties-common

- conntrack

- apparmor

run playbook

ansible-playbook cluster.yml -i inventory/cluster/inventory.ini --become --become-user=root --extra-vars "ansible_sudo_pass=mypassword"

reset

ansible-playbook -i inventory/cluster/inventory.ini -b --become-user=ps reset.yml --extra-vars "ansible_sudo_pass=mypassword"

issue

- No package matching 'aufs-tools' is available
  - Remove aufs-tools from the required packages.
  - It used to be a dependency of docker.io, but was dropped from docker.io's dependencies after Ubuntu 22.04.
- static hostname is already set, so the specified transient hostname will not be used.\nCould not set transient hostname: Interactive authentication required.
  - Pass the sudo password with --extra-vars.
- An exception occurred during task execution. To see the full traceback, use -vvv. The error was: apt_pkg.Error: E:Conflicting values set for option Signed-By regarding source https://download.docker.com/linux/ubuntu/ kinetic: /etc/apt/keyrings/docker.gpg != , E:The list of sources could not be read.
  - sudo rm -rf /etc/apt/sources.list.d
- Package containerd.io is not available, but is referred to by another package.
  - sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) latest"
  - sudo apt-get reinstall docker-ce docker-ce-cli containerd.io
- Dpkg::Options::=--force-confdef\" -o \"Dpkg::Options::=--force-confold\" install 'containerd.io=1.4.9-1'' failed: E: Version '1.4.9-1' for 'containerd.io' was not found
  - curl -O https://download.docker.com/linux/debian/dists/buster/pool/stable/amd64/containerd.io_1.4.9-1_amd64.deb
  - sudo apt install ./containerd.io_1.4.9-1_amd64.deb

I did not carry out the "set static ip" step below.

set static ip

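- With DHCP, CoreDNS consults the node's `/etc/resolv.conf`, so DHCP-provided DNS settings can affect pod containers.

- A pod can find another pod in the same namespace with a PQDN, but needs an FQDN to find one outside its namespace. In that case DHCP-supplied settings may keep lookups from behaving as expected. (I did not actually run into this issue.)

PQDN: partially qualified domain name

FQDN: fully qualified domain name

ex) https://**www**.naver.com, https://**psawesome**.tistory.com

- The bold part is the host; a name that spells out both the host and the domain is called an FQDN.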
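Whether a name is treated as partial or absolute depends on the resolver's `ndots` option and on whether the name ends with a dot. The sketch below assumes the usual pod default of `ndots:5` and mirrors that rule. A name with fewer dots than `ndots` goes through the search list first, which is where search domains inherited from the node (for example from DHCP) can interfere. The function name `uses_search_list` is made up for this example.

```shell
# Return 0 if the resolver would try the search list first for this name.
# Arguments: the name, and optionally ndots (assumed default: 5).
uses_search_list() {
  name="$1"; ndots="${2:-5}"
  case "$name" in
    *.) return 1 ;;   # trailing dot: absolute name, the search list is skipped
  esac
  # Count the dots in the name.
  dots=$(printf '%s' "$name" | tr -cd '.' | wc -c | tr -d ' ')
  [ "$dots" -lt "$ndots" ]
}

# Possible usage:
#   uses_search_list my-svc.other-ns.svc.cluster.local && echo "search list first"
#   uses_search_list my-svc.other-ns.svc.cluster.local. || echo "absolute lookup"
```

Appending a trailing dot is therefore one way to rule out search-domain effects while debugging a lookup.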

cd /etc/netplan/

sudo cp ./00-installer-config.yaml ./00-installer-config.yaml.20221207

sudo vim ./00-installer-config.yaml

# write

network:
  ethernets:
    enp0s3:
      dhcp4: no
      dhcp6: no
      addresses: [192.168.32.21/24]
      gateway4: 192.168.32.1
      nameservers:
        addresses: [8.8.8.8,8.8.4.4]
  version: 2
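Indentation is significant in netplan YAML, so generating the file can be less error-prone than typing it. This is a sketch only: `render_netplan` is a hypothetical helper, the nameservers are the ones used above, and it keeps the `gateway4` key from this post even though newer netplan releases flag it as deprecated in favor of `routes`.

```shell
# Print a static-IP netplan config for one interface.
# Arguments: interface name, address in CIDR form, gateway address.
render_netplan() {
  iface="$1"; cidr="$2"; gateway="$3"
  cat <<EOF
network:
  ethernets:
    ${iface}:
      dhcp4: no
      dhcp6: no
      addresses: [${cidr}]
      gateway4: ${gateway}
      nameservers:
        addresses: [8.8.8.8, 8.8.4.4]
  version: 2
EOF
}

# Possible usage:
#   render_netplan enp0s3 192.168.32.21/24 192.168.32.1 | sudo tee /etc/netplan/00-installer-config.yaml
#   sudo netplan try   # rolls back automatically unless the change is confirmed
```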

With kubespray, all you can do is run it and pray.
